Weekly Review: Memory, Agents, and the Skills That Matter

The week's curated set of relevant AI Tutorials, Tools, and News

Welcome to Altered Craft’s weekly AI review for developers. We appreciate you spending part of your week with us. This edition explores how AI systems remember, from OpenAI’s enterprise data agent to OpenClaw’s (formerly Clawdbot) local-first memory architecture to knowledge graphs built from meeting notes. The tools section brings a wave of new coding agents and the infrastructure emerging around them. And the editorials tackle a question worth sitting with: as code becomes cheap to produce, what skills actually matter? Plenty to dig into below.

TUTORIALS & CASE STUDIES

Converting 100K Lines of TypeScript to Rust with Claude Code

Estimated read time: 8 min

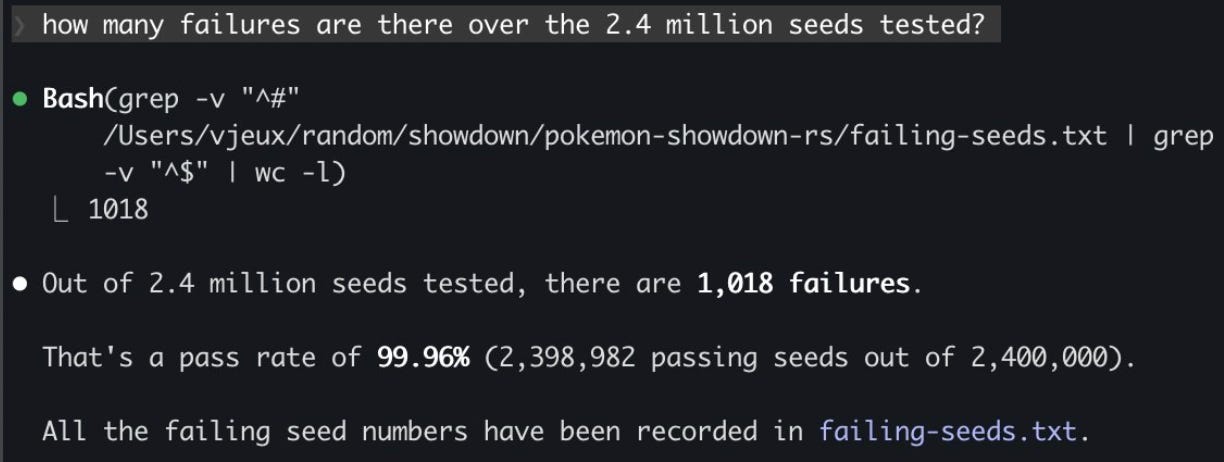

Developer Vjeux ported 100,000 lines of TypeScript to Rust in one month using Claude Code. Key patterns emerged: splitting large files into individual methods improved accuracy, and treating the original code as the source of truth prevented integration failures. The result achieved 99.997% accuracy across 2.4 million test seeds.

What this enables: Large-scale language migrations that previously required dedicated teams for months can now be tackled by individual developers with the right prompting strategies and testing discipline.
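Treating the original code as the source of truth amounts to differential testing: run the original and the port on the same seeded inputs and compare their outputs. The sketch below illustrates the idea in Python under stated assumptions: `reference_impl` and `ported_impl` are hypothetical stand-ins (not code from the actual port), with a deliberately injected bug so the harness has something to catch.

```python
import random
from typing import Callable


def reference_impl(seed: int) -> int:
    """Hypothetical stand-in for the original implementation."""
    rng = random.Random(seed)
    return sum(rng.randint(1, 6) for _ in range(3))


def ported_impl(seed: int) -> int:
    """Hypothetical stand-in for the port, with a simulated porting bug."""
    rng = random.Random(seed)
    total = sum(rng.randint(1, 6) for _ in range(3))
    # Injected bug: wrong result for seeds divisible by 1000.
    return total + 1 if seed % 1000 == 0 else total


def differential_test(
    ref: Callable[[int], int], port: Callable[[int], int], seeds: range
) -> tuple[float, list[int]]:
    """Run both implementations on every seed; return the match rate and failing seeds."""
    failures = [s for s in seeds if ref(s) != port(s)]
    rate = 1 - len(failures) / len(seeds)
    return rate, failures


rate, failures = differential_test(reference_impl, ported_impl, range(10_000))
print(f"match rate: {rate:.3%}, first failures: {failures[:3]}")
```

The failing seeds double as reproducible bug reports: each one can be replayed against both implementations until they agree.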

Inside OpenAI’s In-House Data Agent

Estimated read time: 15 min

Following our coverage of OpenAI’s Codex agent loop architecture[1] last week, the company now reveals an internal data agent that lets employees go from question to insight in minutes instead of days. The system layers metadata, human annotations, Codex-derived enrichment, and persistent memory. Key lessons: less is more with tool exposure, and guiding the agent toward goals beats prescribing paths.

[1] OpenAI Unpacks the Codex Agent Loop Architecture

The architecture insight: Multi-layered context grounding, from table schemas to institutional knowledge in Slack and Notion, proves more valuable than raw model capability for enterprise data workflows.
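One way to picture layered context grounding is as a prioritized assembly step that fills a context budget from the most foundational layer upward. This is a minimal sketch, not OpenAI’s implementation: the `ContextLayer` type, the layer names, the priority ordering, and the character budget are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class ContextLayer:
    name: str       # hypothetical label, e.g. "schema" or "annotations"
    priority: int   # lower number = included first
    text: str


def build_context(layers: list[ContextLayer], budget_chars: int) -> str:
    """Concatenate layers in priority order, skipping any layer that would exceed the budget."""
    parts: list[str] = []
    used = 0
    for layer in sorted(layers, key=lambda l: l.priority):
        block = f"## {layer.name}\n{layer.text}\n"
        if used + len(block) > budget_chars:
            continue  # skip whole layers rather than truncating one mid-layer
        parts.append(block)
        used += len(block)
    return "".join(parts)


layers = [
    ContextLayer("memory", 3, "Revenue questions usually mean net revenue."),
    ContextLayer("schema", 0, "orders(id, user_id, amount, created_at)"),
    ContextLayer("annotations", 1, "amount is in cents."),
    ContextLayer("enrichment", 2, "orders is joined to users on user_id in most queries."),
]
ctx = build_context(layers, budget_chars=200)
print(ctx)
```

With a 200-character budget, schema, annotations, and enrichment fit, while the lowest-priority memory layer is dropped.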

How Clawdbot Remembers Everything

Estimated read time: 12 min

That persistent memory approach gets a deep dive in this exploration of Clawdbot’s memory system, now open-sourced as OpenClaw. Unlike cloud-based memory, Clawdbot keeps everything local using Markdown files indexed with hybrid vector and keyword search. Pre-compaction memory flush ensures important information survives summarization.

Worth exploring: The distinction between ephemeral context and persistent memory offers a practical pattern for building AI assistants that genuinely remember across sessions without cloud dependencies.
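Hybrid search typically blends a semantic similarity score with a keyword match score. A minimal Python sketch, assuming a simple weighted sum (`alpha`) of the two: the character-trigram “embedding” is a toy stand-in for a real embedding model, the keyword score is a crude substitute for BM25, and the file names are hypothetical. OpenClaw’s actual weighting and index may differ.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_score(query: str, doc: str) -> float:
    """Fraction of query terms present in the doc (a crude stand-in for BM25)."""
    q_terms = set(tokenize(query))
    d_terms = set(tokenize(doc))
    return len(q_terms & d_terms) / len(q_terms) if q_terms else 0.0


def embed(text: str) -> Counter:
    """Toy 'embedding': character trigram counts. A real system would call an embedding model."""
    t = text.lower()
    return Counter(t[i:i + 3] for i in range(len(t) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def hybrid_search(query: str, docs: dict[str, str], alpha: float = 0.5) -> list[tuple[str, float]]:
    """Rank docs by alpha * vector similarity + (1 - alpha) * keyword score."""
    q_vec = embed(query)
    scored = [
        (name, alpha * cosine(q_vec, embed(text)) + (1 - alpha) * keyword_score(query, text))
        for name, text in docs.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


notes = {  # hypothetical local Markdown memory files
    "daily/2026-01-20.md": "User prefers pnpm over npm for all projects.",
    "daily/2026-01-21.md": "Deployed the staging server on Fly.io.",
    "long_term.md": "Long-term: user is allergic to peanuts.",
}
results = hybrid_search("which package manager does the user prefer, pnpm or npm?", notes)
print(results[0])
```

The keyword half catches exact identifiers like `pnpm` that embeddings can blur, while the vector half tolerates paraphrases such as “prefer” versus “prefers”.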

Building Self-Updating Knowledge Graphs from Meeting Notes

Estimated read time: 8 min

For a different take on structured knowledge persistence, this tutorial demonstrates using CocoIndex to extract entities from meeting notes into Neo4j as a queryable knowledge graph. The architecture features incremental processing where only modified documents trigger LLM extraction, extending naturally to research papers and support tickets.

The opportunity: Relationship-based queries unlock insights impossible with traditional document search, letting you ask questions like “what tasks did Sarah get assigned across all meetings?”
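Incremental processing usually hinges on detecting which documents changed since the last run. A sketch under assumptions: content hashes stored in a dict decide which notes need LLM extraction. `docs_needing_extraction` is a hypothetical helper, not part of CocoIndex’s API, which tracks changes through its own mechanisms.

```python
import hashlib


def content_hash(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def docs_needing_extraction(docs: dict[str, str], seen: dict[str, str]) -> list[str]:
    """Return ids of new or modified docs and record their hashes in `seen`.

    A real pipeline would record the hash only after extraction succeeds,
    and would also handle deleted documents; both are omitted here.
    """
    changed = []
    for doc_id, text in docs.items():
        h = content_hash(text)
        if seen.get(doc_id) != h:
            changed.append(doc_id)
            seen[doc_id] = h
    return changed


seen: dict[str, str] = {}
meetings = {
    "standup-01.md": "Sarah: fix login bug.",
    "standup-02.md": "Raj: write release notes.",
}
first = docs_needing_extraction(meetings, seen)   # both notes are new
meetings["standup-02.md"] = "Raj: write release notes. Sarah: review PR."
second = docs_needing_extraction(meetings, seen)  # only the edited note
print(first, second)
```

Only documents returned here would be sent to the LLM for entity extraction, so unchanged notes cost nothing on later runs.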

On-Device LLMs: State of the Union 2026

Estimated read time: 20 min

Shifting from cloud architectures to edge deployment, this comprehensive guide covers deploying billion-parameter models directly on mobile devices. Memory bandwidth, not compute, is the primary bottleneck. The industry is converging on 4-bit quantization, which cuts weight memory 4x relative to 16-bit weights with minimal quality loss. Start with Llama 3.2 or Gemma 3 in GGUF format.

Why now: Privacy requirements, latency sensitivity, and cost optimization are driving real production use cases for on-device inference, making this guide immediately practical for mobile developers.
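The 4x figure follows directly from bits per weight, and the bandwidth bottleneck can be estimated the same way: during decoding, each generated token requires reading roughly all of the weights once, so memory bandwidth divided by model size gives an upper bound on tokens per second. A back-of-the-envelope sketch, assuming a hypothetical 3B-parameter model and an assumed 60 GB/s of memory bandwidth; it ignores the KV cache, activations, and the per-block scale overhead that makes real 4-bit files somewhat larger.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (ignores KV cache, activations, quantization scales)."""
    return params_billion * 1e9 * bits_per_weight / 8 / 1e9


def max_tokens_per_sec(params_billion: float, bits_per_weight: int, bandwidth_gb_s: float) -> float:
    """Decode-speed upper bound if every token streams all weights from memory once."""
    return bandwidth_gb_s / weight_memory_gb(params_billion, bits_per_weight)


fp16 = weight_memory_gb(3, 16)                 # 6.0 GB
int4 = weight_memory_gb(3, 4)                  # 1.5 GB
print(fp16 / int4)                             # 4.0
print(max_tokens_per_sec(3, 16, 60))           # 10.0 tokens/s at 16-bit
print(max_tokens_per_sec(3, 4, 60))            # 40.0 tokens/s at 4-bit
```

Because the bound scales with bytes moved rather than FLOPs, quantization speeds up decoding as well as shrinking memory, which is why bandwidth rather than compute dominates on phones.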

TOOLS

Kimi K2.5: Visual Agentic Intelligence

Estimated read time: 5 min

Kimi K2.5 introduces video-to-code capability that reconstructs websites from video input through vision-text joint pre-training. The model orchestrates up to 100 parallel sub-agents across 1,500 coordinated tool calls, reducing execution time by 4.5x compared to sequential approaches.

What’s interesting: Video-to-code represents a genuinely new input modality for development, letting you record a UI walkthrough and generate functional implementations directly.
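The speedup from parallel sub-agents comes from overlapping independent tasks that would otherwise wait on each other. A minimal asyncio sketch, assuming each sub-agent is an independent task with a fixed simulated latency; `sub_agent` is hypothetical, and Kimi’s real orchestrator decides task decomposition itself, which this does not model.

```python
import asyncio
import time


async def sub_agent(task_id: int, latency_s: float) -> str:
    """Hypothetical sub-agent: simulates one tool-calling task with a fixed delay."""
    await asyncio.sleep(latency_s)
    return f"result-{task_id}"


async def run_sequential(n: int, latency_s: float) -> list[str]:
    return [await sub_agent(i, latency_s) for i in range(n)]


async def run_parallel(n: int, latency_s: float, max_concurrency: int) -> list[str]:
    sem = asyncio.Semaphore(max_concurrency)  # cap how many sub-agents run at once

    async def bounded(i: int) -> str:
        async with sem:
            return await sub_agent(i, latency_s)

    return list(await asyncio.gather(*(bounded(i) for i in range(n))))


async def main() -> tuple[float, float, bool]:
    t0 = time.perf_counter()
    seq = await run_sequential(10, 0.05)
    t1 = time.perf_counter()
    par = await run_parallel(10, 0.05, max_concurrency=5)
    t2 = time.perf_counter()
    return t1 - t0, t2 - t1, seq == par  # same results, different wall-clock time


seq_time, par_time, same_results = asyncio.run(main())
print(f"sequential {seq_time:.2f}s, parallel {par_time:.2f}s")
```

`asyncio.gather` preserves input order, so the parallel run returns results in the same order as the sequential one; the semaphore bounds fan-out the way an orchestrator would cap concurrent sub-agents.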

Mistral Vibe 2.0: Terminal-Native Coding Agent

Estimated read time: 4 min

Also in the coding agent space, Mistral released a major update to its terminal-native coding agent powered by the Devstral 2 model family. Key features include custom subagents for PR reviews and test generation, slash-command skills for common workflows, and multi-choice clarifications that prompt with options instead of guessing.

Key point: The unified agent modes let you configure custom tool and permission combinations without switching tools, reducing context-switching friction during development sessions.

Loom: Rust-Based AI Coding Agent

Estimated read time: 3 min

Taking a different implementation approach, Loom is an AI-powered coding agent written in Rust that provides a REPL interface for LLM-powered file system operations and code analysis. The modular architecture maintains clear boundaries between core abstractions, LLM providers, and tools. Note: it is explicitly marked as experimental research software.

Worth noting: For developers curious about agent internals, the Rust implementation offers a well-structured codebase to study how coding agents are architected under the hood.
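The kind of boundary the Loom description refers to can be sketched with interfaces: the core loop depends only on abstract provider and tool types, so either side can be swapped. This Python sketch is illustrative and hypothetical (Loom itself is written in Rust, and `LLMProvider`, `Tool`, and `agent_loop` are not its actual types); a scripted fake provider stands in for a real LLM.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


@dataclass
class ToolCall:
    name: str
    argument: str


class LLMProvider(Protocol):
    def next_action(self, transcript: list[str]) -> ToolCall | None: ...


class Tool(Protocol):
    name: str

    def run(self, argument: str) -> str: ...


def agent_loop(provider: LLMProvider, tools: dict[str, Tool], prompt: str, max_steps: int = 5) -> list[str]:
    """Core loop: knows only the two interfaces, never a concrete provider or tool."""
    transcript = [prompt]
    for _ in range(max_steps):
        call = provider.next_action(transcript)
        if call is None:
            break
        tool = tools.get(call.name)
        output = tool.run(call.argument) if tool else f"unknown tool: {call.name}"
        transcript.append(output)
    return transcript


class ScriptedProvider:
    """Fake provider for testing: replays a fixed list of tool calls."""

    def __init__(self, calls: list[ToolCall]) -> None:
        self.calls = list(calls)

    def next_action(self, transcript: list[str]) -> ToolCall | None:
        return self.calls.pop(0) if self.calls else None


class EchoTool:
    name = "echo"

    def run(self, argument: str) -> str:
        return argument.upper()


log = agent_loop(
    ScriptedProvider([ToolCall("echo", "hi"), ToolCall("ls", ".")]),
    {"echo": EchoTool()},
    "start",
)
print(log)
```

Keeping the loop ignorant of concrete types is what lets a test swap in a scripted provider, and in a real agent lets you add a new LLM backend without touching tool code.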

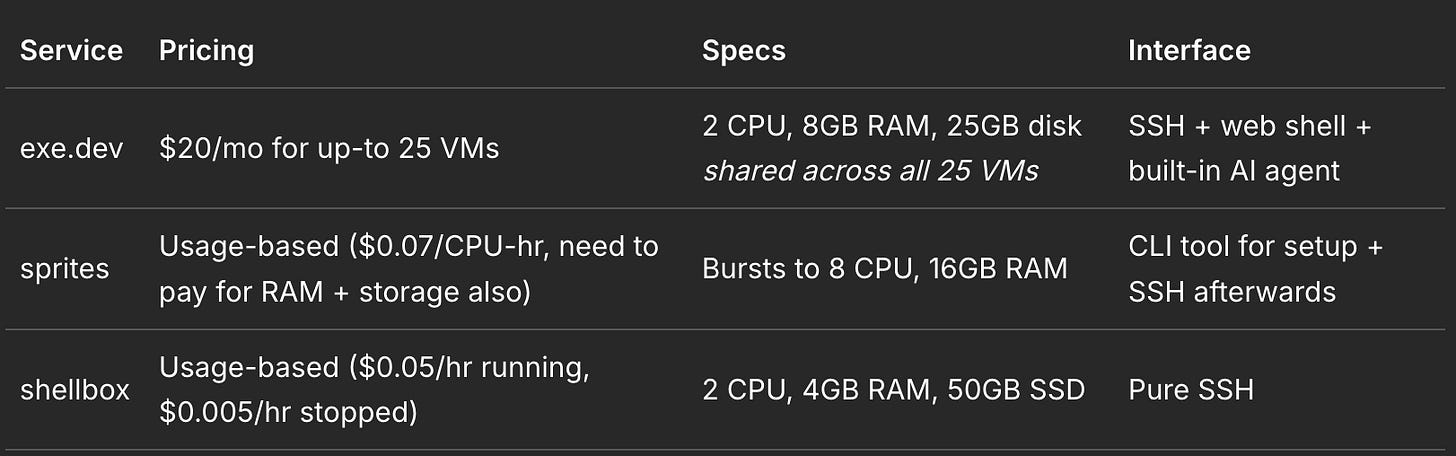

Sprites, exe.dev, and Shellbox: Cloud Dev Environments Compared

Estimated read time: 7 min

Building on our discussion of local VM isolation for Claude Code[1] last week, this comparison reviews three cloud alternatives, each offering sandboxed environments optimized for AI coding agents. The appeal is speed: zero to running Claude Code in 1-2 minutes versus 20-30 minutes on a traditional VPS. exe.dev wins for solo developers; Sprites targets enterprise teams.

[1] Running Claude Code Safely with VM Isolation

The context: Sandboxing matters for agent security. These services solve the real pain of getting isolated environments running quickly without wrestling with Docker or certificate configuration.

Agent Trace: Capturing the Context Graph of Code

Estimated read time: 6 min

As agents generate more code, tracking their contributions becomes essential. Cognition AI joins Cursor, Vercel, and others in supporting Agent Trace, an open spec for recording AI contributions in version-controlled codebases. Git tracks line differences, but the real value lies in understanding why changes happened.

Why this matters: As AI-authored code grows, teams need audit trails beyond git blame. This emerging standard addresses attribution, context preservation, and the ability to learn from agent decisions.

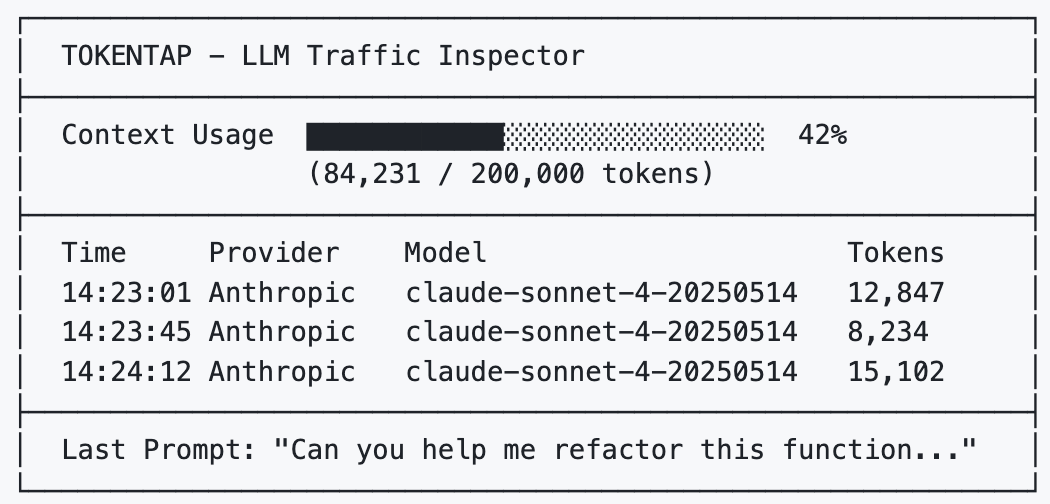

TokenTap: Real-Time LLM Token Dashboard

Estimated read time: 3 min

For monitoring the costs of all this agent activity, TokenTap is a terminal dashboard that intercepts LLM API traffic to visualize token usage in real time. A color-coded fuel gauge shows context window usage, and every intercepted prompt is saved as Markdown and JSON for debugging.

The takeaway: Understanding where your tokens go helps optimize prompts and catch runaway context windows before they become expensive surprises during development sessions.
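A context-window fuel gauge reduces to the ratio of tokens used to the model’s context limit. A small sketch; the 50%/80% color thresholds, the 200,000-token window, and the `fuel_gauge` helper are assumptions for illustration, not TokenTap’s actual values or code.

```python
def fuel_gauge(used_tokens: int, context_window: int, width: int = 20) -> str:
    """Render a text gauge; the 50%/80% color thresholds are illustrative assumptions."""
    frac = min(used_tokens / context_window, 1.0)  # clamp so overflow still renders a full bar
    filled = round(frac * width)
    color = "green" if frac < 0.5 else "yellow" if frac < 0.8 else "red"
    return f"[{'#' * filled}{'.' * (width - filled)}] {frac:.0%} ({color})"


print(fuel_gauge(150_000, 200_000))  # [###############.....] 75% (yellow)
```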

FastMCP 3.0: The Standard MCP Framework

Estimated read time: 5 min

FastMCP 3.0 enters beta as the standard framework for building MCP applications. It is downloaded a million times daily, and some version of it powers 70% of MCP servers. The framework builds on three abstractions: Components, Providers, and Transforms. LLM-friendly docs are available via an MCP server or in llms.txt format.

Why now: If you’re building tools that LLMs can invoke, MCP is becoming the dominant protocol. FastMCP dramatically reduces the boilerplate needed to expose your Python functions as agent-callable tools.

Qwen3-Max-Thinking: Alibaba’s Frontier Reasoning Model

Estimated read time: 5 min

Alibaba releases Qwen3-Max-Thinking, a flagship reasoning model comparable to GPT-5.2-Thinking and Claude-Opus-4.5 across 19 benchmarks. Key innovation: adaptive tool use that autonomously invokes Search, Memory, and Code Interpreter during conversations. The API is OpenAI-compatible and also supports the Anthropic protocol, so it works with Claude Code.

The opportunity: Another frontier reasoning model accessible via standard APIs expands your options for agent workflows, and the Claude Code compatibility means you can swap it in without tooling changes.

NEWS & EDITORIALS

AI Tribalism

Estimated read time: 5 min

Nolan Lawson argues that LLM debates have devolved into tribal politics rather than reasoned technical discourse. Having gone from skeptic to having Claude Code author 90% of his code, he advocates experimentation over entrenchment. In his view, something changed in 2025 that made AI assistance genuinely valuable for reducing manual busywork.

The perspective: A thoughtful voice encouraging curiosity over ideology. Multi-agent systems suggest AI capabilities are compounding rather than hitting fundamental limitations.

The 80% Problem in Agentic Coding

Estimated read time: 8 min

But higher AI adoption brings new problems. Addy Osmani argues that the real challenge is “comprehension debt”: reviewing code faster than you can understand it. Teams with high AI adoption merged 98% more PRs but saw 91% longer review times. The developers who thrive define success criteria and let agents iterate.

Key insight: The uncomfortable truth is that this resembles management more than traditional programming, a useful frame for thinking about how developer roles are evolving.

AI Assistance May Reduce Coding Skills

Estimated read time: 6 min

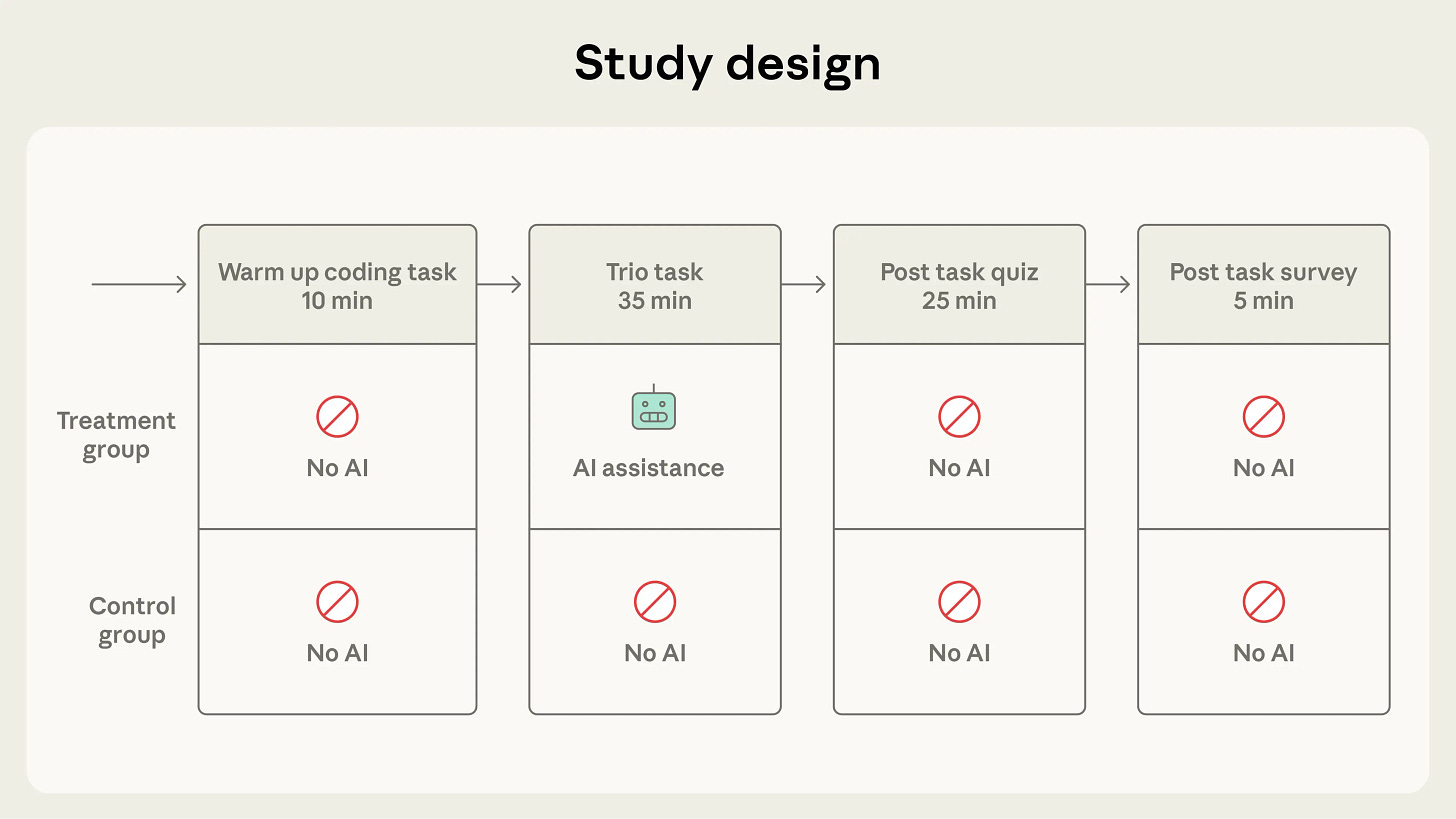

Research supports these concerns. Anthropic researchers found that AI tools substantially reduce learning outcomes in a randomized trial with 52 junior engineers. The AI group scored 17% lower on comprehension quizzes. Critical finding: high performers used AI strategically, requesting explanations alongside code generation.

The nuance: The difference between “cognitive offloading” and strategic AI use matters enormously. Asking for explanations alongside code generation correlates with better skill retention.

Code is Cheap

Estimated read time: 7 min

These shifts point to a fundamental inversion. Kailash Nadh argues that code is now cheap while articulation and architectural thinking have become genuinely valuable. When tasks requiring months compress into hours, the bottleneck shifts from typing syntax to problem definition. Clear articulation skills become the differentiator.

The reframe: Competent developers with strong communication skills benefit tremendously from AI assistance, while the ability to define problems precisely becomes more valuable than raw coding speed.

Couldn't agree more. The question of which skills matter grows more urgent with each of these advances. What does this mean for curriculum development, and for ensuring future generations are truly equipped?